User guide

Architecture

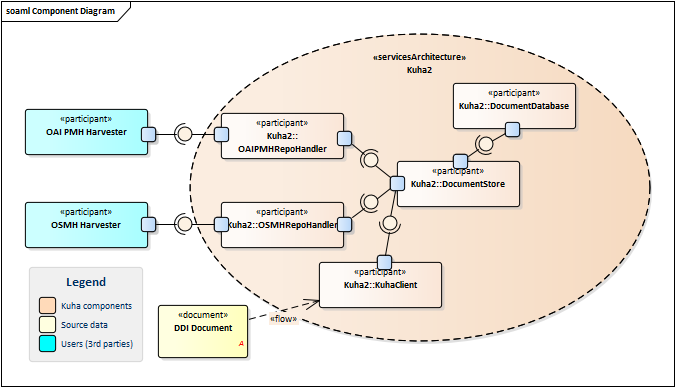

Fig. Kuha2 service architecture diagram.

In a typical usage scenario, the repository owner submits DDI files to the Document Store using Kuha Client. Document Store then stores the metadata into a database. Repository handlers implement different harvesting protocols such as OAI-PMH and query the Document Store accordingly. Only repository handlers should be exposed to external use (that is, to harvesters). If access control or traffic shaping is required, Kuha2 can be deployed behind an API gateway or some other proxy.

Kuha2 components communicate with each other through RESTful APIs. The use of the Document Store is not restricted to DDI: it is possible to bypass Kuha Client and use the Document Store API directly to submit metadata to the Store.

Getting started

Once the installation is complete, you may wish to populate Document Store with some example data in order to see how the software works. This guide demonstrates how to do that. It assumes that all Kuha2 software components are installed on localhost and use default configuration values.

Also refer to OAI-PMH documentation and OSMH documentation for information about the protocols.

Note

The commands in this guide may change in future versions of Kuha Client. Refer to Kuha Client documentation for current commands and configuration parameters.

Importing metadata

Import Study in DDI 1.2.2 (ddi122_minimal_study.xml) to create a single Study with study number study_1 and a single StudyGroup with identifier serie_1. The Study and StudyGroup contain some localized content marked with xml:lang attributes.

Import the XML metadata to Document Store.

python -m kuha_client.kuha_import --document-store-url=http://localhost:6001/v0 --source-file-type=ddi_122_nesstar ddi122_minimal_study.xml

The metadata is now available via OAI-PMH.

curl "http://localhost:6003/v0/oai?verb=GetRecord&metadataPrefix=ddi_c&identifier=study_1"

And OSMH.

curl "http://localhost:6002/v0/GetRecord/Study/study_1"

curl "http://localhost:6002/v0/GetRecord/StudyGroup/serie_1"
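The request URLs above follow a regular pattern, so they can be generated rather than typed by hand. The following is a minimal sketch, assuming the default localhost ports used in this guide; the function names `oai_get_record_url` and `osmh_get_record_url` are hypothetical helpers for illustration and are not part of Kuha2.

```python
from urllib.parse import urlencode

# Default base URLs used throughout this guide (localhost, default ports).
OAI_BASE = "http://localhost:6003/v0/oai"
OSMH_BASE = "http://localhost:6002/v0"


def oai_get_record_url(identifier, metadata_prefix="ddi_c", base=OAI_BASE):
    """Build an OAI-PMH GetRecord request URL (hypothetical helper)."""
    query = urlencode({
        "verb": "GetRecord",
        "metadataPrefix": metadata_prefix,
        "identifier": identifier,
    })
    return f"{base}?{query}"


def osmh_get_record_url(record_type, identifier, base=OSMH_BASE):
    """Build an OSMH GetRecord URL for a record type such as Study or StudyGroup."""
    return f"{base}/GetRecord/{record_type}/{identifier}"


# Print the three URLs queried in the example above.
print(oai_get_record_url("study_1"))
print(osmh_get_record_url("Study", "study_1"))
print(osmh_get_record_url("StudyGroup", "serie_1"))
```

The printed URLs can be passed to curl or to any HTTP client.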

Study in DDI 2.5 (ddi25_minimal_study.xml) is a similar example, but serialized in DDI 2.5. It creates a single Study with study number study_2 and a single StudyGroup with identifier serie_2. The Study and StudyGroup also contain some localized content.

Import the XML metadata to Document Store.

python -m kuha_client.kuha_import --document-store-url=http://localhost:6001/v0 --source-file-type=ddi_c ddi25_minimal_study.xml

The metadata is now available via OAI-PMH.

curl "http://localhost:6003/v0/oai?verb=GetRecord&metadataPrefix=ddi_c&identifier=study_2"

And OSMH.

curl "http://localhost:6002/v0/GetRecord/Study/study_2"

curl "http://localhost:6002/v0/GetRecord/StudyGroup/serie_2"

Variables in DDI 2.5 (ddi25_minimal_variables.xml) contains a Study with three Variables and three Questions.

Import the metadata.

python -m kuha_client.kuha_import --document-store-url=http://localhost:6001/v0 --source-file-type=ddi_c ddi25_minimal_variables.xml

See it in OAI-PMH.

curl "http://localhost:6003/v0/oai?verb=GetRecord&metadataPrefix=ddi_c&identifier=study_3"

And in OSMH.

curl "http://localhost:6002/v0/GetRecord/Study/study_3"

curl "http://localhost:6002/v0/GetRecord/Variable/study_3:VAR_1"

curl "http://localhost:6002/v0/GetRecord/Variable/study_3:VAR_2"

curl "http://localhost:6002/v0/GetRecord/Variable/study_3:VAR_3"

curl "http://localhost:6002/v0/GetRecord/Question/study_3:QUESTION_1"

curl "http://localhost:6002/v0/GetRecord/Question/study_3:QUESTION_2"

curl "http://localhost:6002/v0/GetRecord/Question/study_3:QUESTION_3"
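In the OSMH requests above, Variable and Question identifiers appear to be formed as the study number, a colon, and the element's own identifier. The sketch below generates the same seven URLs under that assumption; the pattern is inferred from this example only, and `osmh_urls_for_study` is a hypothetical helper, not a Kuha2 function.

```python
# Default OSMH base URL used in this guide.
OSMH_BASE = "http://localhost:6002/v0"


def osmh_urls_for_study(study_number, variable_names, question_ids, base=OSMH_BASE):
    """List OSMH GetRecord URLs for a study and its variables and questions.

    Assumes identifiers of the form '<study number>:<name>', as seen in
    the example requests of this guide.
    """
    urls = [f"{base}/GetRecord/Study/{study_number}"]
    urls += [f"{base}/GetRecord/Variable/{study_number}:{name}"
             for name in variable_names]
    urls += [f"{base}/GetRecord/Question/{study_number}:{qid}"
             for qid in question_ids]
    return urls


for url in osmh_urls_for_study(
        "study_3",
        ["VAR_1", "VAR_2", "VAR_3"],
        ["QUESTION_1", "QUESTION_2", "QUESTION_3"]):
    print(url)
```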

Updating metadata

To keep the Document Store up-to-date with your DDI metadata, Kuha Client provides a kuha_upsert module. Its use is similar to the kuha_import module, except that upsert accepts an optional command line parameter that instructs the client to remove records that are not found in the current run. This is useful in batch operations, when you wish to sync a directory full of DDI files to Document Store.
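The effect of removing records that are absent from the current run can be pictured as simple set arithmetic. The following is a conceptual sketch only: `plan_sync` is a hypothetical function written for illustration and does not reflect how Kuha Client is actually implemented.

```python
def plan_sync(stored_ids, incoming_ids, remove_absent=False):
    """Work out what a sync run would do to the stored records.

    stored_ids:    identifiers already in the store
    incoming_ids:  identifiers found in the files of the current run
    remove_absent: when True, stored records missing from the run are removed
    """
    stored = set(stored_ids)
    incoming = set(incoming_ids)
    return {
        "insert": sorted(incoming - stored),   # new records
        "update": sorted(incoming & stored),   # records seen before
        "remove": sorted(stored - incoming) if remove_absent else [],
    }


# Three studies are stored; the current run contains only study_2.
print(plan_sync(["study_1", "study_2", "study_3"], ["study_2"]))
print(plan_sync(["study_1", "study_2", "study_3"], ["study_2"], remove_absent=True))
```

Without the removal option nothing is deleted; with it, every stored record that the current run did not contain is scheduled for removal.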

Updated Study in DDI 2.5 (ddi25_minimal_study_updated.xml) has the same study number, study_2, so it will update the already imported study. This file adds a new distributor (distrbtr element) and removes the elements referring to secondary study titles (partitl elements).

Update the Study in Document Store.

python -m kuha_client.kuha_upsert --document-store-url=http://localhost:6001/v0 --source-file-type=ddi_c ddi25_minimal_study_updated.xml

See the updated study in OAI-PMH.

curl "http://localhost:6003/v0/oai?verb=GetRecord&metadataPrefix=ddi_c&identifier=study_2"

And OSMH.

curl "http://localhost:6002/v0/GetRecord/Study/study_2"

If you run the upsert command with the command line option --remove-absent, the other documents imported earlier will be removed, since they are not found in the DDI file.

First, make sure that the documents imported earlier are still served via OAI-PMH.

curl "http://localhost:6003/v0/oai?verb=ListIdentifiers&metadataPrefix=ddi_c"

Now run the upsert command with --remove-absent.

python -m kuha_client.kuha_upsert --remove-absent --document-store-url=http://localhost:6001/v0 --source-file-type=ddi_c ddi25_minimal_study_updated.xml

Then look at ListIdentifiers again.

curl "http://localhost:6003/v0/oai?verb=ListIdentifiers&metadataPrefix=ddi_c"

ListIdentifiers should now return only a single identifier, study_2.
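To compare the two ListIdentifiers responses without reading raw XML, the identifiers can be extracted with a few lines of Python. This is a minimal sketch that relies only on the standard OAI-PMH 2.0 response layout; the `SAMPLE` document is a hand-written, abbreviated illustration and not actual Kuha2 output, and `list_identifiers` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

# Namespace of OAI-PMH 2.0 response documents.
OAI_NS = {"oai": "http://www.openarchives.org/OAI/2.0/"}


def list_identifiers(response_xml):
    """Return record identifiers from an OAI-PMH ListIdentifiers response.

    Headers flagged with status="deleted" are skipped.
    """
    root = ET.fromstring(response_xml)
    headers = root.findall("./oai:ListIdentifiers/oai:header", OAI_NS)
    return [
        header.findtext("oai:identifier", namespaces=OAI_NS)
        for header in headers
        if header.get("status") != "deleted"
    ]


# Hand-written, abbreviated sample response (an assumption for illustration).
SAMPLE = """<OAI-PMH xmlns="http://www.openarchives.org/OAI/2.0/">
  <ListIdentifiers>
    <header>
      <identifier>study_2</identifier>
    </header>
  </ListIdentifiers>
</OAI-PMH>"""

print(list_identifiers(SAMPLE))
```

In practice the response body fetched by the curl command above would be passed to `list_identifiers` in place of `SAMPLE`.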

Deleting all records

To remove the example data from Document Store you can issue a delete command with Kuha Client, which will delete all documents from all collections.

python -m kuha_client.kuha_delete --document-store-url=http://localhost:6001/v0 ALL ALL
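If the Kuha Client commands in this guide need to be scripted, for example to reset and repopulate a test installation, they can be assembled and run from Python. The sketch below uses only the modules and options that appear in this guide; `kuha_command` and `run` are hypothetical wrappers, and the sketch assumes that the order of the command line options does not matter.

```python
import subprocess
import sys

# Default Document Store URL used in this guide.
DOCUMENT_STORE_URL = "http://localhost:6001/v0"


def kuha_command(module, *args, document_store_url=DOCUMENT_STORE_URL):
    """Assemble the argument list for a kuha_client module (hypothetical wrapper).

    module is one of the module names used in this guide, for example
    kuha_import, kuha_upsert or kuha_delete.
    """
    return [
        sys.executable, "-m", f"kuha_client.{module}",
        f"--document-store-url={document_store_url}",
        *args,
    ]


def run(argv):
    """Run a prepared command; raises CalledProcessError on a non-zero exit."""
    return subprocess.run(argv, check=True)


# Show the delete command from this section without executing it.
print(" ".join(kuha_command("kuha_delete", "ALL", "ALL")))
```

Calling `run(kuha_command("kuha_delete", "ALL", "ALL"))` would execute the delete, so the example only prints the command.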