User guide

Architecture

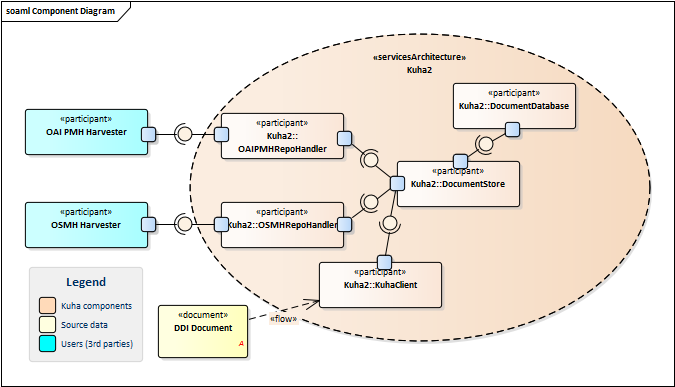

Fig. Kuha2 service architecture diagram.

In a typical usage scenario, the repository owner submits DDI files to the Document Store using Kuha Client. Document Store then stores the metadata into a database. Repository handlers implement different harvesting protocols such as OAI-PMH and query the Document Store accordingly. Only repository handlers should be exposed to external use (that is, to harvesters). If access control or traffic shaping is required, Kuha2 can be deployed behind an API gateway or some other proxy.

Kuha2 components communicate with each other through RESTful APIs, and the use of the Document Store is not restricted to DDI. It is possible to bypass Kuha Client and submit metadata to the Document Store directly through its API.

Getting started

Once the installation is complete, you may wish to populate Document Store with some example data to see how the software works. This guide demonstrates how to do that. It assumes that all Kuha2 software components are installed on localhost and use the default configuration values.

Refer also to the OAI-PMH and OSMH documentation for information about the protocols.

Note

The commands in this guide may change in future versions of Kuha Client. Refer to Kuha Client documentation for current commands and configuration parameters.

Synchronizing metadata

Kuha Client is used to keep Document Store up to date with metadata records stored in a filesystem. It provides a command line interface, kuha_sync, which updates records in Document Store. The process assumes that every run contains the whole batch of records that needs to be available from Kuha. In other words, every run deletes all records that were not found in the processed metadata records. This behaviour can be changed via configuration.
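The default full-batch behaviour can be modelled as set arithmetic over record identifiers. The following Python sketch is a hypothetical illustration, not Kuha Client's code. `plan_sync`, `SyncPlan`, and the `remove_absent` switch are names invented here, and the real configuration option that disables deletions is not shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SyncPlan:
    """Outcome of one hypothetical sync run."""

    upsert: frozenset  # identifiers in this batch: created or updated
    delete: frozenset  # identifiers stored earlier but absent from this batch


def plan_sync(stored_ids, batch_ids, remove_absent=True):
    """Model which records a full-batch sync would touch.

    remove_absent=True mirrors the default behaviour described above;
    remove_absent=False stands in for switching deletion off through
    configuration (the real configuration option is not modelled here).
    """
    stored, batch = frozenset(stored_ids), frozenset(batch_ids)
    absent = stored - batch if remove_absent else frozenset()
    return SyncPlan(upsert=batch, delete=absent)


if __name__ == "__main__":
    # study_2 was synced by an earlier run, but its file is missing now.
    plan = plan_sync({"study_1", "study_2"}, {"study_1", "study_3"})
    print("upsert:", sorted(plan.upsert))  # ['study_1', 'study_3']
    print("delete:", sorted(plan.delete))  # ['study_2']
```

The point of the model is that a record disappears from Kuha simply because its file was not part of the run, so a partial batch removes everything outside it.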

Import the example Study in DDI 1.2.2 to create a single Study with study number study_1 and a single StudyGroup with identifier serie_1. The Study and StudyGroup contain some localized content marked with xml:lang attributes.
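The xml:lang attribute is the only thing that distinguishes the localized content. The following sketch gives a rough picture of how such content can be read. It groups title texts by language using Python's standard ElementTree module. The DDI fragment and the function name `localized_texts` are hypothetical and are not taken from the example files.

```python
import xml.etree.ElementTree as ET

# ElementTree reports the reserved xml: prefix under this namespace URI.
XML_LANG = "{http://www.w3.org/XML/1998/namespace}lang"

# Hypothetical DDI Codebook fragment, not copied from the example files.
FRAGMENT = """
<codeBook xmlns="ddi:codebook:2_5">
  <stdyDscr>
    <citation>
      <titlStmt>
        <titl xml:lang="en">Example study</titl>
        <titl xml:lang="fi">Esimerkkitutkimus</titl>
      </titlStmt>
    </citation>
  </stdyDscr>
</codeBook>
"""


def localized_texts(xml_text, local_name="titl"):
    """Map each xml:lang value to the text of elements named local_name."""
    root = ET.fromstring(xml_text.strip())
    texts = {}
    for elem in root.iter():
        # Compare local names so the DDI namespace (1.2.2 or 2.5) does not matter.
        if elem.tag.rsplit("}", 1)[-1] == local_name:
            texts[elem.get(XML_LANG, "")] = (elem.text or "").strip()
    return texts


print(localized_texts(FRAGMENT))  # {'en': 'Example study', 'fi': 'Esimerkkitutkimus'}
```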

Import the XML metadata into Document Store.

kuha_sync --document-store-url=http://localhost:6001/v0 ddi122_minimal_study.xml

The metadata is now available via OAI-PMH.

curl "http://localhost:6003/v0/oai?verb=GetRecord&metadataPrefix=ddi_c&identifier=study_1"
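You can also issue the same request programmatically. This sketch uses only the Python standard library. The helper names are hypothetical. It assumes the default endpoint shown above and the standard OAI-PMH 2.0 namespace in the response.

```python
import urllib.parse
import urllib.request
import xml.etree.ElementTree as ET

OAI_ENDPOINT = "http://localhost:6003/v0/oai"  # default used in this guide
OAI_NS = {"oai": "http://www.openarchives.org/OAI/2.0/"}


def get_record_url(identifier, metadata_prefix="ddi_c", endpoint=OAI_ENDPOINT):
    """Build the same GetRecord request as the curl command above."""
    query = urllib.parse.urlencode({
        "verb": "GetRecord",
        "metadataPrefix": metadata_prefix,
        "identifier": identifier,
    })
    return f"{endpoint}?{query}"


def header_identifier(response_xml):
    """Return the record header identifier from a GetRecord response.

    OAI-PMH reports failures such as idDoesNotExist as an <error> element
    in the response body, so check for it explicitly.
    """
    root = ET.fromstring(response_xml)
    error = root.find("oai:error", OAI_NS)
    if error is not None:
        raise LookupError(f"{error.get('code')}: {(error.text or '').strip()}")
    return root.findtext(
        "oai:GetRecord/oai:record/oai:header/oai:identifier", namespaces=OAI_NS
    )


def fetch_header_identifier(identifier):
    """Query a running repository handler (requires network access)."""
    with urllib.request.urlopen(get_record_url(identifier)) as response:
        return header_identifier(response.read())
```

The sketch checks for `<error>` explicitly because an OAI-PMH error arrives as a normal XML response rather than as an HTTP failure.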

It is also available via OSMH.

curl "http://localhost:6002/v0/GetRecord/Study/study_1"

curl "http://localhost:6002/v0/GetRecord/StudyGroup/serie_1"
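In these examples, the OSMH URLs follow a `GetRecord/<record type>/<identifier>` pattern. A small helper that builds them might look like the following. The function name is hypothetical, and the set of record types is limited to those that appear in this guide.

```python
import urllib.parse

OSMH_ENDPOINT = "http://localhost:6002/v0"  # default used in this guide
# Record types that appear in this guide's examples.
RECORD_TYPES = ("Study", "StudyGroup", "Variable", "Question")


def osmh_get_record_url(record_type, record_id, endpoint=OSMH_ENDPOINT):
    """Build an OSMH GetRecord URL following the pattern of the curl commands."""
    if record_type not in RECORD_TYPES:
        raise ValueError(f"record type not covered by this sketch: {record_type}")
    # Percent-encode unsafe characters but keep ':' literal, since the
    # guide's Variable and Question identifiers contain one.
    quoted_id = urllib.parse.quote(record_id, safe=":")
    return f"{endpoint}/GetRecord/{record_type}/{quoted_id}"


for record_type, record_id in (("Study", "study_1"), ("StudyGroup", "serie_1")):
    print(osmh_get_record_url(record_type, record_id))
```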

Study in DDI 2.5 is a similar example, serialized in DDI 2.5 instead of DDI 1.2.2. It creates a single Study with study number study_2 and a single StudyGroup with identifier serie_2. The Study and StudyGroup also contain some localized content.

Import the XML metadata into Document Store.

kuha_sync --document-store-url=http://localhost:6001/v0 ddi25_minimal_study.xml

The metadata is now available via OAI-PMH.

curl "http://localhost:6003/v0/oai?verb=GetRecord&metadataPrefix=ddi_c&identifier=study_2"

It is also available via OSMH.

curl "http://localhost:6002/v0/GetRecord/Study/study_2"

curl "http://localhost:6002/v0/GetRecord/StudyGroup/serie_2"

Variables in DDI 2.5 contains a Study with study number study_3, along with three Variables and three Questions.

Import the metadata.

kuha_sync --document-store-url=http://localhost:6001/v0 ddi25_minimal_variables.xml

See it in OAI-PMH.

curl "http://localhost:6003/v0/oai?verb=GetRecord&metadataPrefix=ddi_c&identifier=study_3"

See it in OSMH as well.

curl "http://localhost:6002/v0/GetRecord/Study/study_3"

curl "http://localhost:6002/v0/GetRecord/Variable/study_3:VAR_1"

curl "http://localhost:6002/v0/GetRecord/Variable/study_3:VAR_2"

curl "http://localhost:6002/v0/GetRecord/Variable/study_3:VAR_3"

curl "http://localhost:6002/v0/GetRecord/Question/study_3:QUESTION_1"

curl "http://localhost:6002/v0/GetRecord/Question/study_3:QUESTION_2"

curl "http://localhost:6002/v0/GetRecord/Question/study_3:QUESTION_3"
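The Variable and Question identifiers above join the study number and the item name with a colon. If that form holds in general, you can generate and check the six URLs in a loop. This is a sketch with hypothetical function names. `http_statuses` needs a running OSMH repository handler.

```python
import urllib.error
import urllib.request

OSMH_ENDPOINT = "http://localhost:6002/v0"  # default used in this guide


def child_record_urls(study_number, variables, questions, endpoint=OSMH_ENDPOINT):
    """List OSMH GetRecord URLs for a study's Variables and Questions.

    Assumes the '<study number>:<name>' identifier form seen in the
    study_3 commands above.
    """
    urls = []
    for record_type, names in (("Variable", variables), ("Question", questions)):
        urls.extend(
            f"{endpoint}/GetRecord/{record_type}/{study_number}:{name}" for name in names
        )
    return urls


def http_statuses(urls, timeout=10):
    """Map each URL to its HTTP status code (needs a running repository handler)."""
    statuses = {}
    for url in urls:
        try:
            with urllib.request.urlopen(url, timeout=timeout) as response:
                statuses[url] = response.status
        except urllib.error.HTTPError as err:
            statuses[url] = err.code
    return statuses


if __name__ == "__main__":
    # Only prints the URLs; pass them to http_statuses() against a live instance.
    for url in child_record_urls("study_3",
                                 [f"VAR_{n}" for n in range(1, 4)],
                                 [f"QUESTION_{n}" for n in range(1, 4)]):
        print(url)
```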

Deleting all records

Kuha Client provides a command line interface, kuha_delete, for deleting records from Document Store. To remove the example data, you can delete all documents from all collections. By default, the delete process uses logical deletions, which only mark records as deleted. Use --delete-type hard to physically remove all traces of the example records from Document Store.

kuha_delete --delete-type hard --document-store-url=http://localhost:6001/v0 ALL ALL
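An in-memory model helps picture the difference between the two deletion types. This is a hypothetical sketch only. It does not model Document Store's actual storage or field names, and `logical` is this sketch's own label, since the guide only names the `hard` value.

```python
def delete_records(store, record_ids, delete_type="logical"):
    """Apply a hypothetical deletion to an in-memory {id: record} store.

    'logical' flags the record and keeps it; 'hard' removes it entirely.
    These names belong to this sketch; only 'hard' appears in the guide.
    """
    if delete_type not in ("logical", "hard"):
        raise ValueError(f"unknown delete type: {delete_type}")
    for record_id in record_ids:
        if record_id not in store:
            continue
        if delete_type == "hard":
            del store[record_id]  # no trace remains
        else:
            store[record_id]["deleted"] = True  # still stored, marked deleted


store = {"study_1": {"deleted": False}, "study_2": {"deleted": False}}
delete_records(store, ["study_1"])                     # logical (default)
delete_records(store, ["study_2"], delete_type="hard")
print(store)  # {'study_1': {'deleted': True}}
```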

Recommended setup

Store all your metadata records in a directory and keep the records in this directory up to date. For example, assume you create a directory named xml_sources in your home directory. Its full path is then similar to /home/user/xml_sources.

Run kuha_sync against this directory every time a record is added, changed, or removed. You can use the file-cache functionality to speed up consecutive runs. You can also create a cron job or use systemd timers to run the sync process periodically and automatically.

kuha_sync --file-cache /home/user/kuha_sync_cache.pickle /home/user/xml_sources
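If you prefer to schedule a script rather than the bare command, a thin Python wrapper can assemble the same kuha_sync invocation. The sketch below uses only the `--document-store-url` and `--file-cache` flags shown in this guide. The paths are the example paths above, and the function names are hypothetical.

```python
import shutil
import subprocess
from pathlib import Path

# Paths and URL from this guide's examples; adjust them to your setup.
SOURCES = Path("/home/user/xml_sources")
FILE_CACHE = Path("/home/user/kuha_sync_cache.pickle")
DOCUMENT_STORE_URL = "http://localhost:6001/v0"


def kuha_sync_command(sources=SOURCES, file_cache=FILE_CACHE,
                      document_store_url=DOCUMENT_STORE_URL):
    """Build the kuha_sync argument list, using only flags shown in this guide."""
    return [
        "kuha_sync",
        f"--document-store-url={document_store_url}",
        "--file-cache", str(file_cache),
        str(sources),
    ]


def run_sync():
    """Run one sync; raises CalledProcessError if kuha_sync exits non-zero."""
    if shutil.which("kuha_sync") is None:
        raise FileNotFoundError("kuha_sync was not found on PATH")
    subprocess.run(kuha_sync_command(), check=True)
```

A cron entry or a systemd timer could then run a script that calls `run_sync()`. Because `check=True` is set, a failing run ends that script with a non-zero exit status, which the scheduler can report.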

Note

The file-cache is not invalidated automatically. Remember to remove the file-cache if you have removed records using kuha_delete, upgraded Kuha Client, or altered the records in Document Store using some mechanism other than kuha_sync.
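Removing the file-cache is an ordinary file deletion. The following minimal Python sketch assumes the cache path used in the example above. `remove_file_cache` is a hypothetical name.

```python
from pathlib import Path

FILE_CACHE = Path("/home/user/kuha_sync_cache.pickle")  # path from the example above


def remove_file_cache(file_cache=FILE_CACHE):
    """Delete the kuha_sync file-cache, if present.

    Returns True when a file was removed and False when there was none,
    so it is safe to call after every kuha_delete or Kuha Client upgrade.
    """
    try:
        Path(file_cache).unlink()
    except FileNotFoundError:
        return False
    return True
```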